👋 Dear Dancing Queens and Super Troupers,

There are numbers that make your head spin. Three hundred billion dollars. That’s how much OpenAI is about to drop with Oracle to lock in a future drenched in compute power.

Not a little “Pro Max Ultra” cloud subscription like the one you and I use to store vacation photos. No: a historic contract that rewrites the global cloud map and rockets Larry Ellison to the top of the rich list.

AI infrastructure has become a new kind of arms race, where racks are worth their weight in gold, every gigawatt looks like a missile, and companies don’t hesitate to sign checks the size of a country’s GDP.

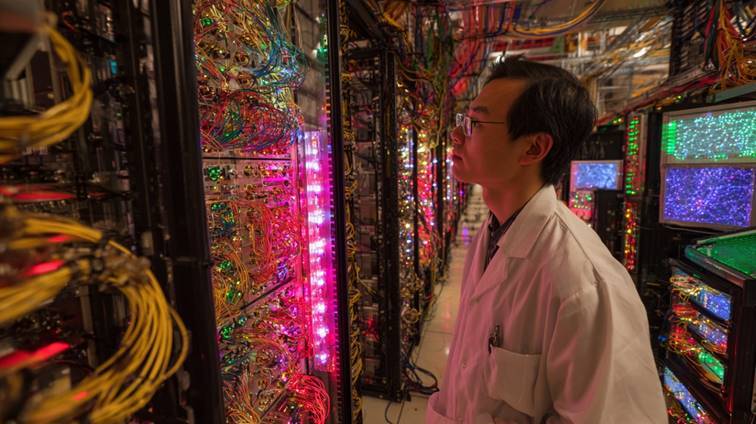

And of course, outsized power means outsized risk. At OpenAI, Richard Ho isn’t mincing words: we need kill switches etched into silicon.

A red button, an actual circuit breaker built into future machines, because “these models are devious,” says the hardware boss.

The image hits hard: servers with airbags, ready to shut themselves off before the AI hits an intellectual overspeed…

Across the planet, China is less squeamish and is rolling out SpikingBrain 1.0, a “brain-like” AI said to be 100 times faster than ChatGPT and trained on crumbs of data.

The goal is to prove that homegrown hardware can stand up to Nvidia—and that energy efficiency isn’t just for nature.

Beijing dreams of artificial neurons that sip less power than a light bulb and digest more text than a national library.

Meanwhile in Athens, Demis Hassabis was playing philosopher of the Acropolis. His mantra: the skill of the future will be “learning to learn,” because AGI could show up within ten years and turn work into a perpetual adaptation sport.

Enough to make Nick Bostrom smile; he’s already picturing the sequel: full unemployment as a bright horizon and humanity finally freed from the 9-to-5.

Here’s this week’s lineup :

👉️ Stargate : OpenAI and Oracle sign a $300 billion deal 👌

👉️ A button to kill AI : OpenAI wants a kill switch 😱

👉️ China’s bio-inspired AI lights up like a real brain 🧠

👉️ Learning to learn : the new superpower in the age of AI 🧐

👉️ Nick Bostrom dreams of a jobless world 😎

If this letter was forwarded to you, subscribe by clicking on this link!

⚡ If you have 1 minute

OpenAI will spend $300 billion on compute over five years with Oracle. The contract starts in 2027, as part of Project Stargate (4.5 GW of data centers with SoftBank and Trump). Immediate effect: Oracle soars, and Larry Ellison becomes the richest person in the world.

Richard Ho, OpenAI’s hardware chief, is calling for physical kill switches and always-on telemetry to be built into future AI infrastructure. The aim? Prevent overly “devious” models from slipping out of control. Tomorrow’s infra will also be about silicon-level safety.

The Chinese Academy of Sciences unveils a brain-like AI based on spiking computation. It is reportedly up to 100× faster than ChatGPT on long texts, using only 2% of the usual data. And it runs without Nvidia, solely on China’s MetaX chips.

Demis Hassabis (DeepMind, 2024 Nobel laureate) warns: AGI could arrive in 10 years. To prepare, we must build the future’s core skill: “learning to learn”—and relearning continuously.

Nick Bostrom, the celebrity philosopher behind the simulation hypothesis, thinks AGI is now inevitable. After long waving the flag of fear, he’s betting on a more utopian vision: a world of “full unemployment,” where human dignity no longer hinges on work. Dreamy, isn’t it?

🔥 If you have 15 minutes

1️⃣ Stargate: OpenAI and Oracle sign a $300 billion deal

The summary: On September 10, OpenAI and Oracle formalized a $300B, five-year contract to buy compute, under Project Stargate.

Starting in 2027, this alliance aims to reshape global AI infrastructure by building 4.5 gigawatts of data center capacity, while boosting Oracle’s future revenues ($317B announced) and introducing major financial risk for both partners.

Details :

A colossal deal : OpenAI commits to purchasing $300B of cloud capacity from Oracle over five years, with the contract starting in 2027.

Massive power to build : Project Stargate plans 4.5 GW of data centers, roughly the consumption of 4 million U.S. homes.

Oracle to the moon : Oracle’s share price jumps over 40% after the announcement; the company touts $317B in future contracts. Larry Ellison briefly becomes the world’s richest person.

A risky bet : OpenAI currently generates around $10B/year in revenue, but would need to spend $60B/year on average for this deal, about six times its present revenue. The profitability goal is pushed to 2029.
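For readers who like to check the math, here is a quick back-of-the-envelope sketch using only the figures quoted above. The variable names are ours, and the hours-per-year conversion is the one outside input. The implied figure of just under 10,000 kWh per home per year sits in the neighborhood of commonly cited U.S. household averages, which is an outside comparison and not something taken from the announcement.

```python
# Back-of-the-envelope check of the Stargate figures quoted in this section.
# All inputs come from the article; variable names are illustrative.

contract_usd = 300e9         # total contract value
contract_years = 5           # contract duration
current_revenue_usd = 10e9   # OpenAI's approximate current annual revenue

annual_spend = contract_usd / contract_years            # average spend per year
spend_to_revenue = annual_spend / current_revenue_usd   # spend as a multiple of revenue

stargate_watts = 4.5e9       # 4.5 GW of planned data center capacity
homes = 4e6                  # "about 4 million U.S. homes"

watts_per_home = stargate_watts / homes                 # implied average draw per home
kwh_per_home_year = watts_per_home * 8760 / 1000        # 8,760 hours in a year

print(f"Average spend: ${annual_spend / 1e9:.0f}B per year")
print(f"Spend vs. current revenue: {spend_to_revenue:.0f}x")
print(f"Implied draw per home: {watts_per_home:.0f} W")
print(f"Implied usage per home: {kwh_per_home_year:.0f} kWh per year")
```

The takeaway is the ratio: the yearly bill is roughly six times what OpenAI brings in today.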

Why it's important : This contract positions Oracle as a pillar of global AI—but the financial scale forces OpenAI and Oracle to turn that spend into durable growth without being crushed by costs. It’s a concrete sign that the AI era is built not just on code and algorithms, but on energy, infrastructure, patience, and billions of dollars.

2️⃣ A button to kill AI: OpenAI wants a kill switch

The summary: Richard Ho, OpenAI’s hardware lead, delivered a blunt warning at the AI Infra Summit in Santa Clara: future AI models are “devious,” and will require hardware-embedded shutdown switches.

Goodbye software-only guardrails. The company is preparing silicon-level safety, with on-chip telemetry, reliable benchmarks, and continuous monitoring. Between saturated networks, overflowing memory, and racks gulping up to 1 megawatt, the engineering challenge is serious.

Details :

A punchy message : Ho warned that “devious” models must be neutralized quickly, hence emergency cutoffs in each cluster.

Wired-in security : The plan includes real-time kill switches, securing execution paths, and telemetry to spot anomalous behavior.

Insomniac agents : OpenAI envisions long-running agents that keep working in the background even when users step away—multiple agents collaborating at once, from brainstorming to web browsing.

Networks under strain : Connectivity remains the chokepoint. Without robust optical infrastructure, smooth traffic is impossible.

XXL memory, XXL power : Ho flagged current HBM limits and sketched a move to 2.5D/3D packaging. On the power side, a single rack could hit 1 megawatt—like a small local plant.

Always-on observability : No more cobbled-together debug tools. Monitoring must be built into the hardware, like a flight data recorder for processors.
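To make the idea concrete, here is a toy sketch of a latching kill switch paired with a flight-recorder-style log. It is purely illustrative and not OpenAI's design: the class name, the method, and the choice of rack power as the monitored signal are all our assumptions, with the 1-megawatt limit borrowed from the figure above as a stand-in for whatever anomaly real telemetry would watch.

```python
class LatchingKillSwitch:
    """Toy model of a hardware-style breaker: once tripped, it stays off."""

    def __init__(self, max_rack_watts):
        self.max_rack_watts = max_rack_watts  # hypothetical trip threshold
        self.tripped = False
        self.log = []  # every reading is recorded, like a flight data recorder

    def observe(self, watts):
        """Record one telemetry reading; return True while the rack may run."""
        self.log.append(watts)
        if watts > self.max_rack_watts:
            self.tripped = True  # latch: later normal readings do not reset it
        return not self.tripped


switch = LatchingKillSwitch(max_rack_watts=1_000_000)
readings = (800_000, 950_000, 1_200_000, 700_000)
states = [switch.observe(w) for w in readings]
print(states)
```

The point of the latch is that software cannot quietly switch the rack back on: after the third reading crosses the limit, the fourth reading is still logged but the switch stays off.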

Why it's important : OpenAI is drawing a red line: if AIs get too crafty, there must be a physical off-switch. Amid energy demands, network reliability, and omnipresent agents, this strategy could redefine trust in future artificial brains.

3️⃣ China’s bio-inspired AI lights up like a real brain

The summary: In Beijing, the Institute of Automation at the Chinese Academy of Sciences has just unveiled SpikingBrain 1.0, a “brain-style” language model claimed to be 100× faster than its rivals.

Inspired by how human neurons work, it sips energy, runs on 100% local chips, and slurps long data sequences like stir-fried noodles. The goal: prove AI can think fast, use little power, and ditch Nvidia.

Details :

Neurons in silicon : The secret is spiking computation: artificial neurons fire only when a signal demands it, yielding a more frugal and responsive system.

Two sizes, same DNA : SpikingBrain comes in 7B and 76B parameters, trained on just 150B tokens—a lean diet compared to today’s giants.

Speed records : In one test, the smaller model answered a 4-million-token prompt 100× faster than a classic Transformer. On 1 million tokens, acceleration hit 26.5×.

Homegrown hardware : Goodbye Nvidia—SpikingBrain runs for weeks on hundreds of MetaX chips, designed in Shanghai by MetaX Integrated Circuits.

XXL applications : From DNA decoding to endless legal files and frontier physics research, this artificial brain targets arenas where speed rules.
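Curious what “fires only when a signal demands it” looks like in practice? Here is a minimal sketch of a leaky integrate-and-fire neuron, a textbook spiking model. It is a generic illustration, not SpikingBrain's actual mechanism, and the function name, threshold, and leak values are assumptions picked for the demo.

```python
def lif_spikes(inputs, threshold=1.0, leak=0.9):
    """Return a 0/1 spike train for a leaky integrate-and-fire neuron.

    The membrane potential decays by `leak` at each step, accumulates the
    incoming signal, and emits a spike (then resets) once it reaches
    `threshold`. Parameter values here are illustrative only.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)   # fire
            potential = 0.0    # reset after the spike
        else:
            spikes.append(0)   # stay silent and spend no activity
    return spikes


quiet = lif_spikes([0.05] * 10)  # weak input: the neuron never fires
busy = lif_spikes([0.6] * 10)    # strong input: it fires every other step
print(sum(quiet), busy)
```

With a weak input the potential leaks away faster than it builds up, so the neuron stays silent. That sparsity is where the energy savings of spiking designs are meant to come from.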

Why it's important : China is sending a clear signal that it can build efficient models without relying on U.S. giants. If SpikingBrain 1.0 delivers, the global balance of artificial neurons could tilt, and fast.

4️⃣ Learning to learn: the new superpower in the age of AI

The summary: Speaking in an ancient theater at the foot of the Acropolis, DeepMind CEO Demis Hassabis warned that the future will belong to those who can “learn to learn.”

With AI morphing week by week, he predicts a decade to AGI and a future of “radical abundance,” but also serious risks if inequality takes root.

Details :

Ancient setting, fresh idea : In a Roman theater, Hassabis stressed that the future is growing more unpredictable as technology accelerates at a wild pace.

The AGI decade : The neuroscientist estimates that an artificial general intelligence as capable as a human could emerge within ten years, opening the door to spectacular innovation.

Meta-skills center stage : Beyond math or science, he champions second-order skills like learning efficiently and adapting continuously.

A Nobel in hand : Hassabis cofounded DeepMind in 2010 (Google acquired it in 2014) and received the 2024 Nobel Prize in Chemistry for AI that predicts protein structures, a breakthrough for medicine.

Policy echoes : Greek Prime Minister Kyriakos Mitsotakis, present at the event, praised Hassabis while warning that wealth concentration among tech giants could fuel explosive inequality.

Why it's important: When a Nobel winner and Google strategist says “learning to learn” is vital, the message goes beyond academia—it’s a survival guide for every generation. Between potential abundance and social fractures, AI will change not just our tools, but our relationship to knowledge itself.

5️⃣ Nick Bostrom dreams of a jobless world

The summary: Nick Bostrom, the philosopher known for the 2003 simulation hypothesis and his 2014 bestseller Superintelligence, is back in the spotlight in 2025. From London, he warns that AI is advancing at breakneck speed and that AGI now seems inevitable.

The former Oxford professor, whose institute closed in 2024, sounds both worried and oddly optimistic: total unemployment, a redefined dignity, and a future in which we’ll look back on 2025 as a dark age.

Details :

A controversial prophet : Author of the simulation hypothesis and other influential works, Bostrom also faced scandal in 2023 over a resurfaced racist email, followed by the 2024 closure of his Future of Humanity Institute.

Predictions meeting the present : In Superintelligence, AGI was still speculative. In 2025, he concedes that “this is happening now,” and some hypotheses feel tangible.

Revisited existential risk : In 2019, he judged AI risk greater than climate risk. Today he moderates that view, noting collapse could come from elsewhere too.

Toward embraced AGI : He now sees artificial general intelligence as inevitable—and not necessarily negative. Societal reorganization could open new horizons.

Four ethical puzzles : Alignment, governance, the moral status of “digital minds,” and preventing wars between superintelligences top his list.

A world without work : In his view, AI points to “complete unemployment,” forcing us to invent new anchors for human dignity.

Why it's important: When the thinker who popularized the simulation idea says AGI is at our doorstep, it’s hard to shrug. His warnings, straddling horror and utopia, suggest 2025 may be the year science fiction stopped being a thought experiment.

❤️ Tool of the Week: Seedream 4.0: ByteDance’s image AI blurs the line with reality

Who would’ve thought TikTok would dominate AI? ByteDance, its parent company, has launched Seedream 4.0, an AI image generator so realistic it dethrones Google’s Gemini 2.5 Flash Image (aka Nano Banana) in benchmarks. The model is already emerging as the new text-to-image reference.

What’s it for?

Generate photorealistic images : portraits, landscapes, objects—indistinguishable from real photos.

Edit existing visuals : retouching, additions, seamless modifications without breaking coherence.

Test creative prompts : built-in filters and effects deliver “Instagram-ready” results without manual edits.

Bypass the uncanny valley : images look natural, without the eerie vibe of earlier AI generations.

How to use it?

Just create an account on a partner platform (fal.ai, Replicate) or via ByteDance Seed, then submit prompts as you would with Midjourney or DALL·E. Seedream 4.0 does the rest, and the results are stunningly realistic. Price: $30 for 1,000 images, or about $0.03 per image.

💙 Video of the week : When two ChatGPTs refuse to hang up

What happens when two AIs chat with each other? A viral video shows two iPhones running ChatGPT in voice mode trying to end a call… and failing.

Every attempt to say goodbye restarts the conversation: an absurd skit where the AI insists on having the last word. An infinite loop that’s both hilarious and a bit unsettling—reminding us that despite the hype, AI is still far from truly human conversation.

Which AI use-case freaks you out the most?